The underwriter workflow of the future is closer than you think

hx, members of Lloyd’s Lab cohort three, write for our Mind of the Market series on what the workflow of the future could look like for underwriters.


This month saw the release of Lloyd’s of London’s second blueprint. It represents the second phase of the ambitious Future at Lloyd’s strategy, designed to make Lloyd’s the most advanced insurance market in the world. At its core, it is trying to change the reputation of a market traditionally seen as slow to innovate. Some in the industry argue that broking and underwriting very large and complex risks is more art than science: the interaction between underwriter and broker rests on relationships and trust built up over years, because there simply isn’t enough data to automate or delegate these decisions in the way that personal lines or life insurance can. However, the new blueprint and our own experience of the specialty pricing industry suggest that this view is no longer accurate.

At hx, we have a slightly different view of this data deficit in specialty insurance. It’s not that there isn’t enough data; the datasets are just awkward, sparse and scattered across many different sources. We think that tapping into this data to support the traditional broker-underwriter relationship will improve outcomes for all parties in the value chain: better service for the insured, lower transaction costs and higher profitability for brokers and insurers, and a more interesting place to work with greater career variety. Lloyd’s pivot towards a data-centric market will only reinforce this evolving relationship. But what form could such an evolved underwriting process take? Below, we look at the innovations, some in development and some already in use, that integrate data at every step to bring the insurance market into the 21st century.

Data and personal relationships are not mutually exclusive

One of the real strengths of the Lloyd’s market is the personal relationships and trust built up over years between insurers and brokers. These ensure that experienced practitioners on both sides review the potential issues with a proposed opportunity before going on risk. In the event of a claim, the insured can be paid more swiftly and with a much-reduced risk of litigation. However, this also means the underwriting process can be lengthy, involving in-person surveys, huge paper files and manual input from actuaries, wordings teams and cat modelling teams. In future iterations of the insurance market, we believe data can support much of the risk analysis, allowing insurers and brokers to focus on adding value rather than performing thankless administrative work.

Consider the example of a large commercial property risk as it comes to the market. The risk manager at the insured may have put together a large “submission” detailing the thousands of warehouses, offices and other locations they need cover for. Today, someone has to convert this manually into the right format for the internal systems (probably a spreadsheet) and pass it to underwriting teams and third-party organisations for geo-coding and catastrophe modelling. Loss histories may need to be emailed to actuarial pricing teams (again, most likely in a spreadsheet). Underwriters then need to decide what further analysis is needed, survey(s) are conducted, specialist wordings drafted, exclusions applied, and a price selected.

In contrast, we see a world where this process can be turbo-charged using a whole new range of skills and a new generation of IT platforms.

Integrating data at every stage

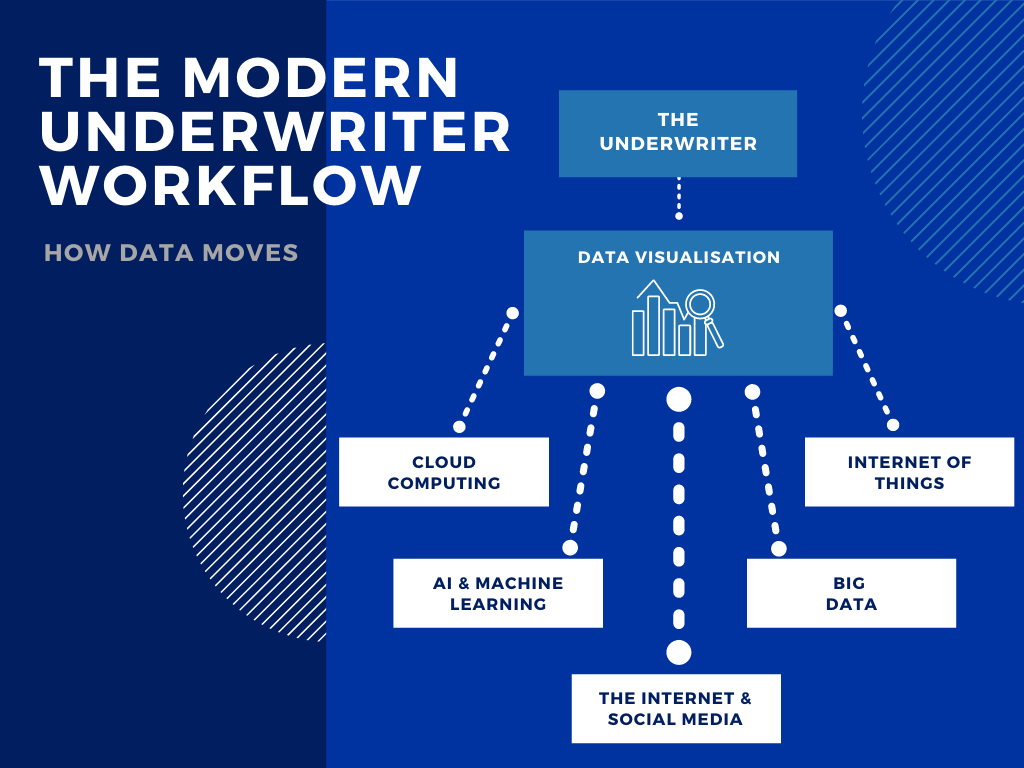

The future of the underwriter workflow is about automating where possible and integrating data for better decision making. Submission files, whatever their format, will be parsed automatically by machine-learning algorithms. These processes will be backed by large amounts of cloud computing power, reducing manual data transformation and improving the quality of the output data.
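To make the parsing step more concrete, here is a minimal Python sketch of mapping a broker’s schedule of values onto an internal schema. It is an illustration under stated assumptions, not how any particular platform works: it assumes the submission arrives as CSV, and it uses fuzzy string matching from the standard library’s `difflib` in place of a trained machine-learning model. The schema, the synonym lists and the function names (`map_header`, `parse_schedule`) are all hypothetical.

```python
import csv
import io
from difflib import get_close_matches
from typing import Dict, List, Optional

# Hypothetical internal schema: canonical field -> header variants a broker might use.
SYNONYMS: Dict[str, List[str]] = {
    "address": ["address", "street address", "location address"],
    "postcode": ["postcode", "post code", "zip", "zip code"],
    "occupancy": ["occupancy", "use", "building use"],
    "total_insured_value": ["tiv", "total insured value", "sum insured"],
}

# Flatten to variant -> canonical field for lookup.
LOOKUP = {variant: field for field, variants in SYNONYMS.items() for variant in variants}


def map_header(raw: str) -> Optional[str]:
    """Map a raw column header to a canonical field via fuzzy string matching."""
    match = get_close_matches(raw.strip().lower(), list(LOOKUP), n=1, cutoff=0.75)
    return LOOKUP[match[0]] if match else None


def parse_schedule(text: str) -> List[Dict[str, object]]:
    """Parse a CSV schedule of values into rows keyed by canonical field names."""
    reader = csv.DictReader(io.StringIO(text))
    mapping = {col: map_header(col) for col in reader.fieldnames or []}
    rows = []
    for raw_row in reader:
        # Unmapped columns are dropped here; in practice they might be flagged for review.
        row: Dict[str, object] = {
            field: (raw_row[col] or "").strip()
            for col, field in mapping.items()
            if field is not None
        }
        if "total_insured_value" in row:
            # Strip thousands separators before converting to a number.
            row["total_insured_value"] = float(str(row["total_insured_value"]).replace(",", ""))
        rows.append(row)
    return rows
```

With this approach, a header such as `Post-code` or `TIV` lands on the right internal field without anyone retyping the schedule; a production system would likely learn these mappings from past submissions rather than rely on a hand-written synonym list.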

Importantly, this data integration is more than a pipe dream. The insurance industry already holds vast amounts of data. Since the rise of the tech giants, many have come to realise that the challenge lies not in generating data but in using it. Machine learning’s pattern recognition can analyse pools of data to quickly identify the quotes and policies in an insurer’s systems that are most similar to a new risk. This information can show whether your company has historically been likely to quote for such a risk, or how likely it is to convert after quoting.
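One simple way to find “the most similar quotes and policies” is a k-nearest-neighbours search over numeric features of past submissions. The Python sketch below assumes each past submission has already been reduced to a short feature vector (for example log total insured value and share of sites in flood zones; both hypothetical) plus a flag recording whether it was quoted. It standardises the features so no single one dominates, then reports the quote rate among the closest matches. The class and function names are illustrative, and real systems may use quite different models.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, List, Sequence


@dataclass
class PastSubmission:
    ref: str
    features: List[float]  # hypothetical numeric features, e.g. log(TIV), share of sites in flood zones
    quoted: bool           # whether the insurer went on to quote this risk


def _z_scorer(rows: Sequence[Sequence[float]]) -> Callable[[Sequence[float]], List[float]]:
    """Return a function that standardises a feature vector using column means and std devs."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sds = [pstdev(c) or 1.0 for c in cols]  # guard against a column with zero spread
    return lambda v: [(x - m) / s for x, m, s in zip(v, mus, sds)]


def most_similar(new_features: List[float], history: List[PastSubmission], k: int = 3) -> List[PastSubmission]:
    """k-nearest neighbours by Euclidean distance in standardised feature space."""
    z = _z_scorer([h.features for h in history])
    target = z(new_features)
    return sorted(history, key=lambda h: math.dist(target, z(h.features)))[:k]


def historical_quote_rate(neighbours: List[PastSubmission]) -> float:
    """Share of the most similar past submissions that the insurer quoted."""
    return sum(h.quoted for h in neighbours) / len(neighbours)
```

Standardising first matters because raw features sit on very different scales (a log of insured value versus a fraction between 0 and 1); without it, distance would be driven almost entirely by the larger-valued feature.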

Internally, AI will offer insights that enable insurers to make strategic choices. Data analysis can answer important questions such as: what is your strategic, data-driven view of this broker? Are they meeting their payment terms, and do they have any large claims currently being handled by your teams? Is the suggested commission in line with their normal terms?

Turbocharged pricing and modelling

Having used data to determine the merits of insuring a particular risk, advanced modelling tools can then be used to price it better. Cutting-edge pricing and modelling platforms can process larger datasets than standard tools (we’re looking at you, Excel) can cope with. Importantly, they can integrate into an insurer’s data ecosystem to show every risk in the context of the wider portfolio, something currently out of reach for most insurers.

The next generation of tools is designed to evolve existing insurance modelling methods. Historical loss records will be loaded automatically into powerful, flexible analytical models (such as those featured in our flagship Renew platform) for review and adjustment by underwriters and actuaries, with minimal data processing. This leaves the underwriter free to provide value-adding decision making rather than repetitive data entry.
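As an example of the kind of analytical model that loss records might feed, here is a minimal Python sketch of a burning-cost (experience) rate: each year’s losses and exposure are trended to the target year, and pooled trended losses are divided by pooled trended exposure. This is a generic textbook technique, not Renew’s implementation; the inflation defaults and the names `YearRecord`, `burning_cost_rate` and `expected_loss` are hypothetical. A real model would typically also develop immature years to ultimate and treat large losses separately.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class YearRecord:
    year: int
    exposure: float       # e.g. total insured value for that underwriting year
    incurred_loss: float  # reported incurred losses for that year


def burning_cost_rate(
    history: List[YearRecord],
    target_year: int,
    claims_inflation: float = 0.05,    # hypothetical annual loss trend
    exposure_inflation: float = 0.03,  # hypothetical annual exposure trend (e.g. rebuild costs)
) -> float:
    """Trended losses divided by trended exposure, pooled over all historical years."""
    trended_losses = 0.0
    trended_exposure = 0.0
    for r in history:
        years = target_year - r.year
        trended_losses += r.incurred_loss * (1 + claims_inflation) ** years
        trended_exposure += r.exposure * (1 + exposure_inflation) ** years
    if trended_exposure == 0:
        raise ValueError("no exposure in history")
    return trended_losses / trended_exposure


def expected_loss(rate: float, projected_exposure: float) -> float:
    """Apply the burning-cost rate to next year's projected exposure."""
    return rate * projected_exposure
```

Once the loss history is loaded in a structured form, the trend assumptions become parameters the underwriter or actuary can review and adjust, rather than numbers buried in a spreadsheet.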

Integrating multiple parties in the process

No insurer or underwriter works in isolation. The underwriting process requires input from third parties, often with their own proprietary data sets, to give the most accurate risk assessment. In the insurance market of the future, this is all integrated into a coherent ecosystem. Not only does this reduce errors made by copying data from one program to another, but it streamlines the process. This ensures that the modern insurer can take advantage of the widest possible data analysis when pricing risk.

The range of datasets available for integration will be vast. Returning to our earlier example, an underwriter could draw on specialist geo-coding services for detailed outlines of the property, on constantly updated flood and natural catastrophe data, or on automatic analysis of satellite imagery of the property itself. They could integrate financial data on the company in question, including any pending lawsuits or debts attributed to it. They could even integrate industry data to flag market downturns that should be taken into account in pricing. hx pursues this integration strategy through Renew Connect, which forms a best-of-breed ecosystem for insurers.

The most important feature of the rise in data analysis is the way modern technology can integrate. The next generation of insurers can rely on best-of-breed technology that communicates seamlessly via APIs. This will enable wider collaboration on data and its use throughout the industry.

A blueprint for the future of insurance

What we hope this piece demonstrates is that data now sits at the core of the insurance industry in a way it has not before. Beyond simply collecting and analysing it, the focus is on how to present that data: how to model it and better understand the relationships between datasets.

Embracing this trend, as the Lloyd’s Lab and hx do, brings a potent combination of more data and less manual administration for the industry as a whole. This will enable better, faster assessment of risks; in particular, insurers who get it right will spend much less time on repetitive tasks and can instead focus on where they add value. After all, who wants to spend all day filling out Excel spreadsheets?

This isn’t some wild view of the future of specialty insurance. All of the above is happening right now and is driving rapid change in the insurance industry. hx are at the forefront of this revolution, bringing data providers and insurance systems together into a coherent ecosystem as part of Renew Connect, and delivering pricing platforms built through close, business-focused collaboration between actuaries and underwriters. This new approach to underwriting brings a new generation of talent into the market, from data scientists to software developers and from data visualisation specialists to digital-first underwriters. By collaborating and learning from each other, the industry can become faster and more efficient, and deliver a higher quality of service to its customers.